The need

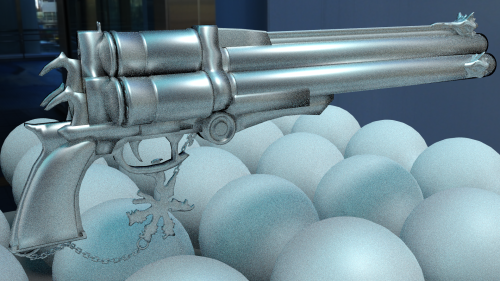

With all the talk about Physically Based Rendering nowadays, it’s worth taking a look at the post-processing effects games abuse today, either to enhance the image or to mask artifacts and lack of detail. I think it is important to have a Physically Based Camera Rendering (PBCR) system in games, because if you want an image that looks as if it was taken from a movie, you have to look at it through some lenses.

The ingredients

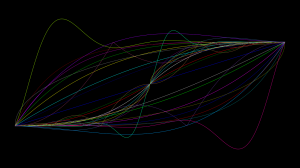

Most (if not all) of today’s AAA games (and even some indie titles) feature high-quality post effects, such as Depth of Field, Motion Blur, Film Grain, (pseudo) Lens Flares, Bloom, Glare, Vignetting and Chromatic Aberration. Usually you can also adjust the Field of View of the camera you’re observing the world through, and sometimes this is even a gameplay feature (think of sniper rifles).

Of course, all of this requires a gamma-correct High Dynamic Range rendering system to work properly. Pair this with Physically Based Rendering, and you should get really realistic results.
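Gamma correctness means doing the lighting and post-processing math on linear values and only encoding for the display at the very end (and decoding sRGB textures when sampling them). A minimal Python sketch of the standard piecewise sRGB transfer functions, assuming an sRGB display; the function names are hypothetical, and in practice the GPU's sRGB texture and render target formats often do this conversion for you:

```python
def linear_to_srgb(c: float) -> float:
    """Encode one linear-light channel value with the sRGB transfer curve."""
    c = min(max(c, 0.0), 1.0)  # clamp: encoding is meant for tone-mapped values
    if c <= 0.0031308:
        return 12.92 * c  # linear segment near black
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def srgb_to_linear(s: float) -> float:
    """Decode an sRGB-encoded value (e.g. a texture texel) back to linear light."""
    s = min(max(s, 0.0), 1.0)
    if s <= 0.04045:
        return s / 12.92
    return ((s + 0.055) / 1.055) ** 2.4
```

For example, linear middle grey (0.18) encodes to roughly 0.46, which is why doing blending or blurring on encoded values visibly darkens and shifts the result.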

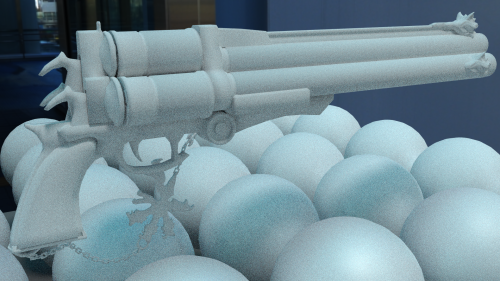

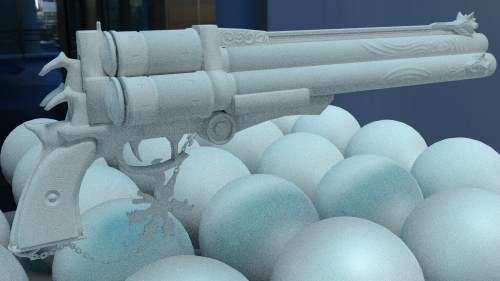

Depth of Field

Depth of Field (or DOF) is an effect that simulates the out-of-focus (blurry) parts of photographs. http://en.wikipedia.org/wiki/Depth_of_field

Bokeh Depth of Field refers to the various shapes that are formed when highlights are out-of-focus. This is in my opinion a pretty nice effect, but there are game styles where this is actually counter-productive (like FPSs).

[UPDATE] The bokeh shape is directly affected by the aperture, as you can see here. On real-world cameras you can even create custom shapes (so sprite-based bokeh is not all bs), and with some clever blending between the shapes you can create dazzling eye-candy effects (who told you this can’t be a gameplay feature?).

On real world cameras you can control this effect by changing the point where you focus.

In addition, you can change the f-stop number (http://en.wikipedia.org/wiki/F-number), which, together with the focal length, sets the diameter of your aperture. The bigger the f-stop number, the smaller the aperture diameter becomes, so less light gets through and the image’s exposure should decrease. A high f-stop number also means that your camera increasingly starts to behave like a pinhole camera (http://en.wikipedia.org/wiki/Pinhole_camera), so more and more of the image should be in focus.
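To tie the blur size to these camera parameters, one option is the standard thin-lens circle of confusion (CoC) formula: the diameter of the blur disc grows with the aperture diameter A = f / N and with the object's distance from the focus plane, while the light reaching the sensor falls with 1 / N². A minimal Python sketch; the function names are hypothetical and all distances are in millimetres:

```python
def circle_of_confusion(focal_length_mm, f_number, focus_dist_mm, object_dist_mm):
    """Thin-lens blur-disc diameter on the sensor, in mm."""
    if focus_dist_mm <= focal_length_mm:
        raise ValueError("focus distance must be beyond the focal length")
    aperture_mm = focal_length_mm / f_number  # N = f / D  =>  D = f / N
    return (aperture_mm
            * abs(object_dist_mm - focus_dist_mm) / object_dist_mm
            * focal_length_mm / (focus_dist_mm - focal_length_mm))


def relative_exposure(f_number, reference_f_number=1.0):
    """Light reaching the sensor relative to a reference aperture (same shutter time)."""
    return (reference_f_number / f_number) ** 2
```

Dividing the CoC by the sensor width and multiplying by the image width gives a blur diameter in pixels, which a DOF pass can then use as its per-pixel kernel size. Points on the focus plane get a CoC of zero, and stopping down from f/2 to f/16 shrinks every other CoC by a factor of eight.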

The f-stop number also depends on the focal length (http://en.wikipedia.org/wiki/Focal_length): it is the focal length divided by the aperture diameter. The focal length in turn directly affects your field of view (FOV): longer focal lengths mean smaller FOV values. The focal length is normally fixed for a given lens, which is why zoom lenses were invented: they let you change the focal length, so you essentially zoom in on the image, your field of view becomes narrower, and your depth of field also becomes shallower (see, everything correlates).
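The two relationships in the paragraph above, N = f / D and a narrower field of view for longer focal lengths, can be written down directly. A minimal Python sketch, assuming a lens focused at infinity and a 36 x 24 mm full-frame sensor (both assumptions; the function names are hypothetical):

```python
import math


def f_number(focal_length_mm, aperture_diameter_mm):
    """N = f / D: the same physical aperture gives a larger f-number on a longer lens."""
    return focal_length_mm / aperture_diameter_mm


def field_of_view_deg(focal_length_mm, sensor_size_mm=36.0):
    """Angle of view across one sensor dimension (36 mm = full-frame width)."""
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))
```

For example, a 50 mm lens on that sensor gives roughly a 40° horizontal field of view. If your projection matrix takes a vertical FOV, pass the 24 mm sensor height instead.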

There are numerous papers around the internet that discuss how to implement this technique efficiently:

http://dice.se/wp-content/uploads/BF3_NFS_WhiteBarreBrisebois_Siggraph2011.pdf

http://www.crytek.com/download/Sousa_Graphics_Gems_CryENGINE3.pdf

http://bartwronski.com/2014/04/07/bokeh-depth-of-field-going-insane-part-1/

Motion Blur

Motion Blur is an effect that simulates the streaking (or blurring) of objects moving relative to the camera, either because of their speed or because of the camera’s long exposure. http://en.wikipedia.org/wiki/Motion_blur

This effect occurs because it actually takes time for the camera to record a frame, and during that time objects move, so they end up covering more than one pixel of the image. Longer exposure times mean that the camera records each frame over an even longer period, so moving objects are more likely to appear blurry.
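A common screen-space approach gathers samples along each pixel's velocity vector, scaled by how long the shutter is open. A minimal 1D Python sketch, assuming a per-pixel velocity buffer in pixels per frame and a hypothetical `shutter_fraction` parameter (exposure time divided by frame time) standing in for the camera's shutter speed:

```python
import math


def motion_blur_row(colors, velocities, shutter_fraction=0.5, taps=8):
    """Blur one row of pixel intensities along each pixel's own velocity.

    colors:           list of floats (linear intensities)
    velocities:       signed screen-space motion in pixels per frame, one per pixel
    shutter_fraction: exposure time / frame time (0.5 is a "180-degree" shutter)
    """
    n = len(colors)
    out = []
    for x in range(n):
        # distance the surface covers while the shutter is open
        blur = velocities[x] * shutter_fraction
        total = 0.0
        for i in range(taps):
            # spread taps evenly over [-blur/2, +blur/2] around the pixel
            t = (i + 0.5) / taps - 0.5
            sx = math.floor(x + t * blur + 0.5)  # nearest pixel
            sx = min(max(sx, 0), n - 1)          # clamp to the row
            total += colors[sx]
        out.append(total / taps)
    return out
```

Because each pixel only gathers along its own velocity, a static background will not smear over a moving object's silhouette. The articles below discuss how to handle that case.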

There are also numerous sources to learn from:

http://john-chapman-graphics.blogspot.hu/2013/01/what-is-motion-blur-motion-pictures-are.html

http://mynameismjp.wordpress.com/the-museum/samples-tutorials-tools/motion-blur-sample/

http://www.gamedev.net/page/community/iotd/index.html/_/hybrid-motion-blur-r321

Film Grain (or noise)

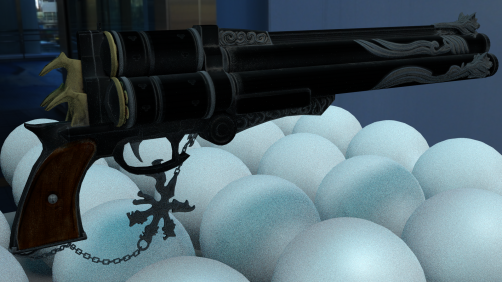

So, suppose you have a perfect image rendered using PBR, but it still doesn’t look right. You may be missing some noise. This dreaded artifact of path tracing systems is actually quite common in real-world scenarios (although it is way more subtle there).

In real photographs the image may look noisy, and how noisy depends on how sensitive the light-capturing surface (the film or the sensor) is set to be. The sensitivity is determined by the ISO speed setting on your camera. High ISO settings mean that the surface is really sensitive to light, so you can take a picture even at night or in a dark room. This comes at a cost, though: the image gets noisier.
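A simple way to mimic this is to add zero-mean noise whose strength is driven by an ISO parameter. The Python sketch below is an assumption, not a sensor model: the square-root scaling with ISO, the base strength and the function name are illustrative choices to tune by eye. It clamps to [0, 1], so it expects tone-mapped, gamma-space input:

```python
import math
import random


def film_grain(pixels, iso, base_iso=100.0, base_strength=0.01, seed=None):
    """Add zero-mean Gaussian grain whose strength grows with ISO.

    The sqrt(iso / base_iso) scaling is an illustrative assumption.
    """
    rng = random.Random(seed)  # seedable, so the grain can be animated per frame
    sigma = base_strength * math.sqrt(iso / base_iso)
    return [min(max(p + rng.gauss(0.0, sigma), 0.0), 1.0) for p in pixels]
```

Passing a new seed each frame gives animated grain, while a fixed seed gives a static pattern that tends to read as a dirty lens rather than film.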

Here’s a link on how to implement this:

http://devlog-martinsh.blogspot.hu/2013/05/image-imperfections-and-film-grain-post.html

Lens flares

Lens flares are again a nice artifact of real world camera systems. They are formed by light reflecting and scattering around in the lens system of a camera. Sci-fi games usually like to abuse this by putting it every-f-in-where, even if it totally shouldn’t be there. Even JJ Abrams would be jealous of some games. http://en.wikipedia.org/wiki/Lens_flare

A nice, natural-looking lens flare effect, though, can add a lot to the realism of the scene.

Here are some links on how to implement it:

http://john-chapman-graphics.blogspot.hu/2013/02/pseudo-lens-flare.html

http://resources.mpi-inf.mpg.de/lensflareRendering/
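The screen-space "pseudo" lens flare idea from the first link boils down to mirroring the image through its centre and sampling the bright-pass texture at several points along the line towards the centre (the "ghosts"). A hedged Python sketch of just the sample-position math, with a hypothetical `ghost_dispersal` parameter; it illustrates the idea and is not a copy of the article's shader:

```python
def ghost_sample_positions(u, v, ghosts=4, ghost_dispersal=0.35):
    """Texture coordinates in [0, 1) at which to sample the bright-pass image.

    Ghosts appear mirrored through the image centre, spaced along the
    vector from the mirrored pixel towards the centre.
    """
    fu, fv = 1.0 - u, 1.0 - v          # mirror through the centre (0.5, 0.5)
    du = (0.5 - fu) * ghost_dispersal  # step towards the centre
    dv = (0.5 - fv) * ghost_dispersal
    positions = []
    for i in range(ghosts):
        # wrap into [0, 1) like a texture sampled with repeat addressing
        positions.append(((fu + du * i) % 1.0, (fv + dv * i) % 1.0))
    return positions
```

Summing the bright-pass samples at these positions (usually with a weight that fades towards the screen edges) produces the ghost image, which is then blurred and tinted before compositing.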

Bloom

Bloom is an effect that produces fringes of light extending from the borders of bright areas in an image: the light kind of bleeds into the dark parts of the image. http://en.wikipedia.org/wiki/Bloom_(shader_effect)

Here are some links on how to implement it:

https://de45xmedrsdbp.cloudfront.net/Resources/files/The_Technology_Behind_the_Elemental_Demo_16x9-1248544805.pdf

https://software.intel.com/en-us/articles/compute-shader-hdr-and-bloom
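The basic bloom recipe is a bright pass (keep only what exceeds a threshold), a wide blur of that result, and an additive composite back onto the image. A 1D Python sketch operating on linear HDR values; the parameter names and defaults are hypothetical:

```python
def bright_pass(pixels, threshold=1.0):
    """Keep only the energy above the threshold (linear HDR values)."""
    return [max(p - threshold, 0.0) for p in pixels]


def box_blur(pixels, radius):
    """Average each pixel with its neighbours within `radius` (clamped at the ends)."""
    n = len(pixels)
    out = []
    for x in range(n):
        lo, hi = max(0, x - radius), min(n, x + radius + 1)
        out.append(sum(pixels[lo:hi]) / (hi - lo))
    return out


def bloom(pixels, threshold=1.0, radius=2, passes=3, intensity=0.5):
    """Bright pass, blur it (repeated box blurs approximate a Gaussian), add back."""
    glow = bright_pass(pixels, threshold)
    for _ in range(passes):
        glow = box_blur(glow, radius)
    return [p + intensity * g for p, g in zip(pixels, glow)]
```

Real-time implementations typically blur progressively downsampled copies of the bright pass instead, which is much cheaper for wide glows.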

Glare

While bloom is an artifact of the camera’s lens system, glare is actually an artifact of your eyes’ lens system. Your eyes can adapt to sudden changes in light intensity pretty quickly, but not perfectly, so you may temporarily have difficulty seeing. http://en.wikipedia.org/wiki/Glare_(vision)

You can simulate this by implementing eye adaptation, which changes the exposure of the image over time based on the average luminance.
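A common way to drive this is to compute the log-average (geometric mean) luminance of the frame, let an "adapted" luminance chase it exponentially over time, and derive the exposure from the adapted value. A hedged Python sketch; the function names, the time constant and the 0.18 middle-grey key are illustrative defaults, not values taken from the paper below:

```python
import math


def log_average_luminance(luminances, delta=1e-4):
    """Geometric mean of scene luminance; delta avoids log(0) on black pixels."""
    return math.exp(sum(math.log(delta + lum) for lum in luminances) / len(luminances))


def adapt_luminance(adapted, target, dt, tau=0.5):
    """Move the adapted luminance towards the target exponentially.

    dt is the frame time in seconds; tau is a time constant in seconds
    (hypothetical default): a larger tau means slower adaptation.
    """
    return adapted + (target - adapted) * (1.0 - math.exp(-dt / tau))


def exposure_from_adaptation(adapted, key=0.18):
    """Scale so that the adapted luminance maps to middle grey."""
    return key / adapted
```

Walking from a dark interior into sunlight then produces a few frames of overexposure that settle as the adapted luminance catches up, which is exactly the temporary difficulty seeing described above. Using different time constants for brightening and darkening is a common refinement.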

Here’s a paper on this:

http://resources.mpi-inf.mpg.de/hdr/peffects/krawczyk05sccg.pdf

Vignetting

Vignetting in real-world camera systems is produced by the lens elements blocking light from one another. This also means that the amount of vignetting depends on the f-stop number (aperture). http://en.wikipedia.org/wiki/Vignetting

The smaller the f-stop number is, the larger the aperture diameter will be, and therefore the more vignetting will be visible; stopping the lens down reduces it.
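As a physically motivated starting point, the cos⁴ law describes natural vignetting: irradiance falls off with the fourth power of the cosine of the angle between the optical axis and the incoming ray, so wide-angle lenses darken their corners more. A rough Python sketch assuming a simple thin lens and a 36 x 24 mm sensor (the function names are hypothetical). The aperture-dependent optical vignetting described above is not modelled; it could be layered on top as an additional artist-tuned falloff:

```python
import math


def natural_vignetting(x_mm, y_mm, focal_length_mm):
    """cos^4 falloff for a point (x, y) on the sensor, measured from its centre."""
    r = math.hypot(x_mm, y_mm)
    # tan(theta) = r / f, so cos(theta) = f / sqrt(f^2 + r^2)
    cos_theta = focal_length_mm / math.hypot(focal_length_mm, r)
    return cos_theta ** 4


def vignette_factor(u, v, focal_length_mm, sensor_w_mm=36.0, sensor_h_mm=24.0):
    """Map a texture coordinate (u, v in [0, 1]) to a multiplier for the colour."""
    x = (u - 0.5) * sensor_w_mm
    y = (v - 0.5) * sensor_h_mm
    return natural_vignetting(x, y, focal_length_mm)
```

With these assumptions a 24 mm lens keeps only about 30% of the light in the extreme corners, while a 200 mm lens keeps almost all of it. Many real lenses partially compensate for this, so treat it as an upper bound.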

Implementation:

http://devlog-martinsh.blogspot.hu/2011/12/glsl-depth-of-field-with-bokeh-v24.html

http://qctwit.blogspot.hu/2012/01/glsl-vignette-and-alpha.html

Chromatic aberration

Chromatic aberration is an effect that occurs when the lens system fails to focus all colors to the same point. This results in colored fringes where there are large light intensity differences in an image. http://en.wikipedia.org/wiki/Chromatic_aberration

So when you implement it, you should probably experiment with luminance-based edge detection filters, so that only the high-contrast areas are affected.
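The sketch below follows that suggestion in 1D. A central-difference luminance gradient (Rec. 709 weights) acts as the edge mask, and red and blue are sampled with opposite offsets that grow towards the image edge, as lateral chromatic aberration does. It is a Python illustration with hypothetical parameter names, not the method from the answer linked below:

```python
def luminance(rgb):
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b  # Rec. 709 weights


def chromatic_aberration_row(row, strength=2.0, edge_threshold=0.1):
    """Offset red and blue in opposite directions, only where local contrast is high.

    row:      list of (r, g, b) tuples in gamma space
    strength: maximum shift in pixels at the row ends (hypothetical parameter)
    """
    n = len(row)
    lum = [luminance(p) for p in row]
    centre = (n - 1) / 2.0
    out = []
    for x in range(n):
        # central-difference luminance gradient as a cheap edge detector
        grad = abs(lum[min(x + 1, n - 1)] - lum[max(x - 1, 0)])
        mask = min(grad / edge_threshold, 1.0)
        # lateral CA grows with distance from the image centre
        shift = strength * mask * (x - centre) / max(centre, 1.0)
        rx = min(max(round(x + shift), 0), n - 1)
        bx = min(max(round(x - shift), 0), n - 1)
        out.append((row[rx][0], row[x][1], row[bx][2]))
    return out
```

Flat regions pass through untouched, so the fringes only show up along strong edges near the borders of the frame.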

Implementation:

http://gamedev.stackexchange.com/questions/58408/how-would-you-implement-chromatic-aberration

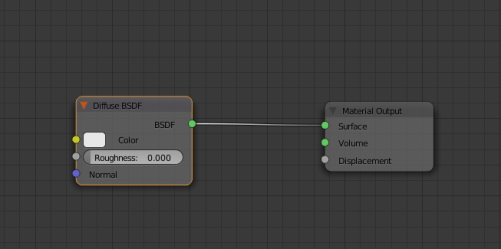

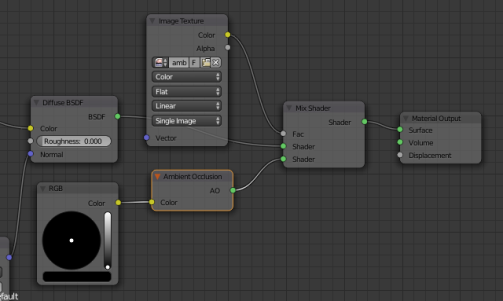

Putting it all together

So after you’ve implemented all of these effects, it’s time to link the parameters together, so that all of them work in a system simulating an actual camera.
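One way to link the parameters is to derive the exposure from the same aperture, shutter time and ISO that drive depth of field, motion blur and film grain. The Python sketch below uses the standard exposure value formula normalised to ISO 100. It maps that value to an exposure multiplier with the saturation-based convention used, for example, in the "Moving Frostbite to PBR" course notes. The function names are hypothetical:

```python
import math


def ev100(f_number, shutter_s, iso):
    """Exposure value normalised to ISO 100 from the three camera settings."""
    return math.log2(f_number ** 2 / shutter_s * 100.0 / iso)


def exposure_scale(f_number, shutter_s, iso):
    """Multiplier applied to linear scene luminance before tone mapping.

    Saturation-based convention: the luminance that just saturates the
    sensor is 1.2 * 2^EV100 (cd/m^2), and it is mapped to 1.0.
    """
    max_luminance = 1.2 * 2.0 ** ev100(f_number, shutter_s, iso)
    return 1.0 / max_luminance
```

The same `f_number` can feed the circle of confusion, the shutter time the motion blur length, and the ISO the grain strength, so changing one setting on the virtual camera affects every effect consistently. Doubling the ISO, for instance, doubles the exposure and also makes the image noisier.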

Here’s an impressive demo that showcases this:

http://dl8.me/camera/client/

Remember that sometimes less is more: most of these effects are normally really subtle, so make sure you don’t go overboard.

[UPDATE] Just a small addition to the implementation part: the rendering order matters. Suppose you have your fully lit scene, with subsurface scattering and reflections blended on top. Then apply these effects in the following order:

1) Depth of Field

2) Motion blur (note that you can do these independently and mix them later as shown here, but quality suffers a bit)

3) Bloom rendering (input: motion blur output): the usual bright pass, etc., plus eye adaptation!

4) Lens flare rendering (input: bright pass output)

5) Combine the output of the motion blur pass, the lens flares and the bloom. Apply vignetting here, to the combined color, then tone mapping and gamma correction to the vignetted color.

6) Render chromatic aberration and film grain here (that is, after tone mapping, in gamma space)

7) Post-processing AA comes last (e.g. SMAA)
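The ordering above can be sketched as a function that only wires passes together. Everything in it is hypothetical: `passes` maps made-up pass names to whatever your renderer uses, and the point is which output feeds which input:

```python
from typing import Callable, Dict


def run_post_chain(lit_scene, passes: Dict[str, Callable]):
    """Apply the post-processing passes in the order listed above (data flow only)."""
    dof = passes["depth_of_field"](lit_scene)             # 1)
    blurred = passes["motion_blur"](dof)                  # 2) input: DOF output
    bright = passes["bright_pass"](blurred)               # 3) incl. eye adaptation
    bloom = passes["bloom"](bright)
    flares = passes["lens_flare"](bright)                 # 4) input: bright pass
    combined = passes["combine"](blurred, bloom, flares)  # 5) combine...
    vignetted = passes["vignette"](combined)              #    ...vignette...
    ldr = passes["gamma"](passes["tonemap"](vignetted))   #    ...tone map + gamma
    ldr = passes["film_grain"](passes["chromatic_aberration"](ldr))  # 6) gamma space
    return passes["post_aa"](ldr)                         # 7) e.g. SMAA
```

Plugging in stubs that just record their names is a cheap way to check that a refactor has not silently reordered the chain.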

If you still don’t quite understand the theory behind all this (especially the real-world part), I recommend reading through this website:

http://www.cambridgeincolour.com/learn-photography-concepts.htm